Unlock the Secrets: Boost Your PyTorch Neural Network Performance with These Game-Changing Techniques!

Are you tired of struggling to get your PyTorch neural network to perform at its best? Look no further! In this article, we're going to explore five techniques that can change the way you approach neural network development in PyTorch: dynamic graph construction, advanced optimization algorithms, efficient data loading and preprocessing, model compression and quantization, and collaborative model development. Get ready to take your models to new heights!

The Power of PyTorch

PyTorch has quickly become one of the most popular frameworks for deep learning, and for good reason. Its dynamic computational graph, intuitive API, and extensive library of modules make it a favorite among researchers and practitioners alike. But to truly harness the power of PyTorch, you need to master the art of optimizing neural network performance.

Technique 1: Dynamic Graph Construction

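One of the key advantages of PyTorch is its dynamic computation graph: the graph is built on the fly each time your forward pass runs, rather than being declared once up front. That means ordinary Python control flow, such as loops and conditionals that depend on the input, works inside a model. By taking advantage of this feature, you can build more flexible models that adapt to the specific requirements of your tasks, for example by handling variable-length inputs or changing depth from one call to the next. Whether you're implementing custom layers or experimenting with novel architectures, PyTorch's dynamic graph construction gives you a great deal of freedom to innovate.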
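As a minimal sketch of what on-the-fly graph construction looks like in practice, the example below applies the same layer a different number of times on each call. The class name `DynamicDepthNet` and the `n_steps` argument are hypothetical, chosen only for illustration.

```python
import torch
import torch.nn as nn


class DynamicDepthNet(nn.Module):
    """Hypothetical model whose depth is chosen per forward call."""

    def __init__(self, dim: int = 8):
        super().__init__()
        self.layer = nn.Linear(dim, dim)
        self.head = nn.Linear(dim, 1)

    def forward(self, x: torch.Tensor, n_steps: int) -> torch.Tensor:
        # Ordinary Python loop: the autograd graph is rebuilt on every call,
        # so the number of layer applications can differ between calls.
        for _ in range(n_steps):
            x = torch.relu(self.layer(x))
        return self.head(x)


torch.manual_seed(0)
model = DynamicDepthNet()
x = torch.randn(4, 8)

out_shallow = model(x, n_steps=1)  # one application of the shared layer
out_deep = model(x, n_steps=3)     # three applications, same parameters

# Gradients flow through however many steps were actually executed.
out_deep.sum().backward()
```

Nothing about the model has to be redeclared between the two calls; the graph used for `backward()` is simply whatever the last forward pass traced.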

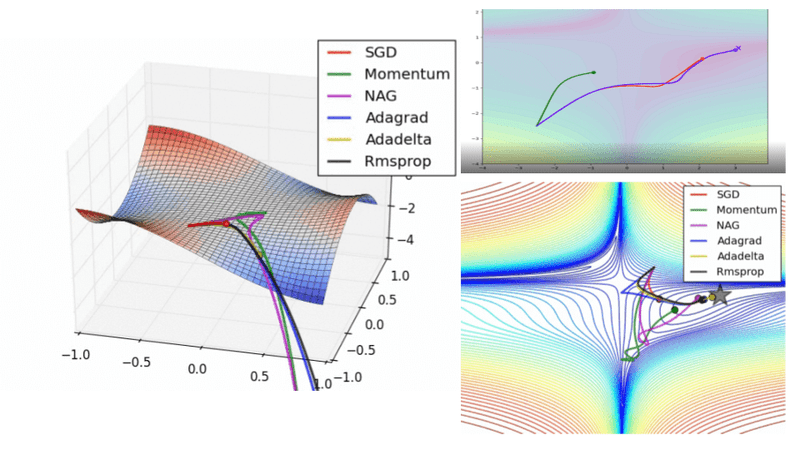

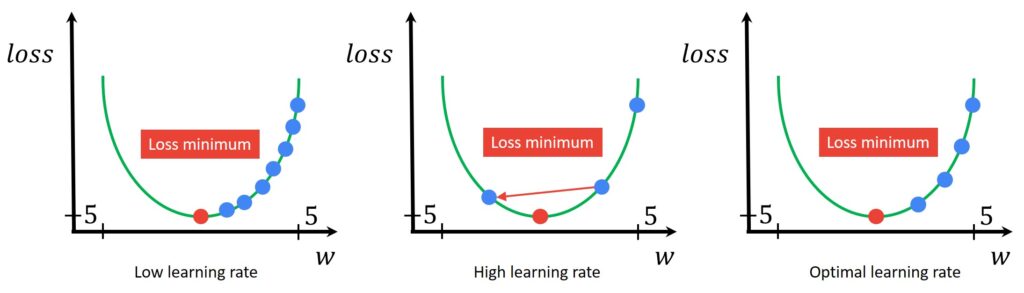

Technique 2: Advanced Optimization Algorithms

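PyTorch provides a wide range of optimization algorithms out of the box in `torch.optim`, but sometimes you need a little extra firepower to squeeze the most out of your models. That's where advanced optimization techniques come in. Quasi-Newton methods like L-BFGS, which approximate second-order curvature information, are available as `torch.optim.LBFGS`; because L-BFGS re-evaluates the loss several times per step, it expects you to pass a closure that recomputes the loss. Meta-learning algorithms like MAML are not built-in optimizers but training procedures that differentiate through inner gradient steps, which PyTorch's autograd supports. Either way, PyTorch makes it practical to implement and experiment with cutting-edge optimization techniques.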
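To make the L-BFGS case concrete, here is a small sketch on a toy least-squares problem. The data, the `w_true` vector, and the hyperparameters are assumptions made up for the example, not recommendations.

```python
import torch

torch.manual_seed(0)

# Toy least-squares problem: recover w_true from noiseless observations.
X = torch.randn(64, 3)
w_true = torch.tensor([1.5, -2.0, 0.5])
y = X @ w_true

w = torch.zeros(3, requires_grad=True)
optimizer = torch.optim.LBFGS(
    [w], lr=1.0, max_iter=50, line_search_fn="strong_wolfe"
)


def closure():
    # L-BFGS may call this several times per step to re-evaluate the loss.
    optimizer.zero_grad()
    loss = ((X @ w - y) ** 2).mean()
    loss.backward()
    return loss


# A single step() runs up to max_iter internal L-BFGS iterations.
optimizer.step(closure)

final_loss = ((X @ w.detach() - y) ** 2).mean().item()
```

The closure is the main difference from optimizers such as SGD or Adam, where you would compute the loss and call `backward()` yourself before `step()`.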

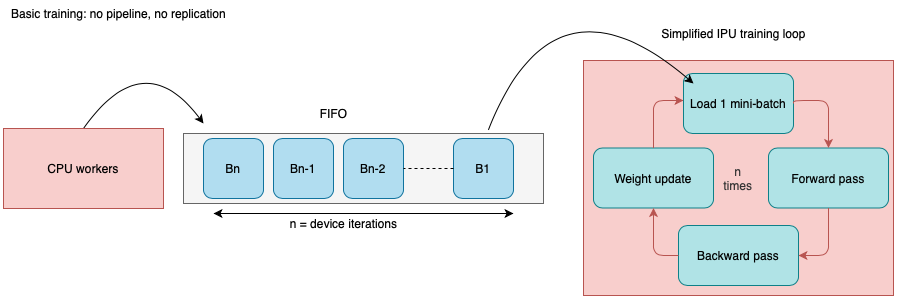

Technique 3: Efficient Data Loading and Preprocessing

In the world of deep learning, data is king. But dealing with large datasets can be a challenge, especially when you're working with limited computational resources: if the input pipeline cannot keep up, the model sits idle waiting for the next batch. That's why efficient data loading and preprocessing techniques are essential for maximizing training throughput. Whether you're using PyTorch's built-in utilities in `torch.utils.data`, such as `Dataset` and `DataLoader`, or customizing your own data pipelines, optimizing data ingestion is crucial for ensuring smooth and efficient training.
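A minimal sketch of the built-in utilities is shown below. `SquaresDataset` is a hypothetical in-memory dataset used only to keep the example self-contained; a real pipeline would typically read and preprocess samples from disk in `__getitem__`.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class SquaresDataset(Dataset):
    """Hypothetical dataset that yields (x, x**2) pairs."""

    def __init__(self, n: int):
        self.x = torch.arange(n, dtype=torch.float32)

    def __len__(self) -> int:
        return len(self.x)

    def __getitem__(self, idx: int):
        x = self.x[idx]
        return x, x ** 2


loader = DataLoader(
    SquaresDataset(100),
    batch_size=32,
    shuffle=True,
    # 0 loads in the main process; a positive value uses background worker
    # processes, which usually helps when per-sample preprocessing is costly.
    num_workers=0,
    # Pinned host memory can speed up host-to-GPU copies when a GPU is present.
    pin_memory=torch.cuda.is_available(),
)

batches = list(loader)  # each element is an (inputs, targets) pair of tensors
```

A reasonable starting point is to increase `num_workers` until the GPU is no longer waiting on data, measuring rather than guessing, since the best value depends on the machine and the dataset.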

Technique 4: Model Compression and Quantization

As neural network models continue to grow in size and complexity, the need for efficient model compression techniques becomes increasingly important. PyTorch provides a variety of tools for compressing and quantizing models, such as the pruning utilities in `torch.nn.utils.prune`, allowing you to reduce memory footprint and inference latency, often at the cost of only a small drop in accuracy that you should measure on your own task. Whether you're pruning unimportant weights or quantizing weights and activations to lower-precision types, PyTorch makes it easier to deploy lightweight models that pack a punch.
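As a small illustration of the pruning side only, the sketch below removes the half of a layer's weights with the smallest magnitude. The layer size and the 50% amount are arbitrary assumptions for the example; quantization is not shown here.

```python
import torch
import torch.nn as nn
import torch.nn.utils.prune as prune

torch.manual_seed(0)
layer = nn.Linear(16, 8)

# Zero out the 50% of weights with the smallest absolute value.
# This attaches a mask and keeps the original values as `weight_orig`.
prune.l1_unstructured(layer, name="weight", amount=0.5)

# Make the pruning permanent: drop the mask and the saved original weights.
prune.remove(layer, "weight")

# Fraction of weights that are now exactly zero.
sparsity = (layer.weight == 0).float().mean().item()
```

Note that unstructured pruning like this only sets weights to zero; the tensor is still stored densely, so any size or latency benefit depends on sparse-aware storage or kernels further down the deployment path.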

Technique 5: Collaborative Model Development

Finally, one of the most powerful techniques for boosting PyTorch neural network performance is collaborative model development. By harnessing the collective intelligence of the PyTorch community, you can leverage pre-trained models, share best practices, and collaborate on cutting-edge research projects. Whether you’re participating in open-source projects, contributing to research papers, or attending PyTorch meetups, collaboration is key to staying at the forefront of deep learning innovation.

Conclusion: Unleash Your PyTorch Potential

With these game-changing techniques in your toolkit, you're ready to get more out of your PyTorch neural networks. Whether you're a seasoned deep learning practitioner or just getting started, these strategies will help you train faster, deploy leaner models, and push the boundaries of what's possible. So don't wait: dive in, experiment, and see what amazing things you can create with PyTorch!