Data Loading in Snowflake: Techniques for Efficiently Ingesting Data from Diverse Sources

In today’s data-driven world, organizations are continually seeking ways to streamline their data management processes. Snowflake, a cloud-based data warehousing platform, has emerged as a preferred choice for many due to its scalability, performance, and ease of use. One critical aspect of utilizing Snowflake effectively is mastering the art of data loading. This article explores various techniques for loading data into Snowflake from diverse sources, including CSV files, JSON files, and external databases.

Introduction to Snowflake Data Loading

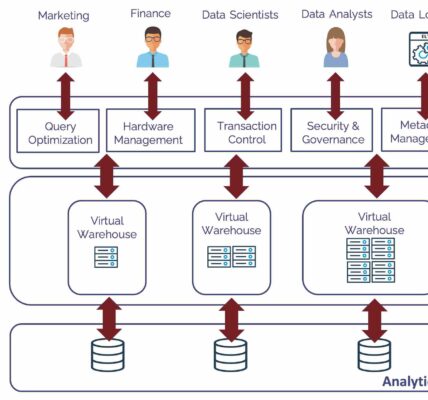

Snowflake can ingest data from multiple sources, which lets organizations consolidate and analyze large volumes of data in one place. Before diving into specific loading techniques, it’s essential to understand Snowflake’s architecture, which comprises three main layers: database storage, compute (query processing in virtual warehouses), and cloud services. Because storage and compute are separate, each can be scaled independently, so a loading workload can be given its own warehouse without slowing queries that run elsewhere.

Loading Data from CSV Files

Comma-Separated Values (CSV) files are one of the most common data formats encountered in the data ecosystem. Snowflake loads CSV files in bulk through stages and the COPY INTO command. The process typically involves the following steps:

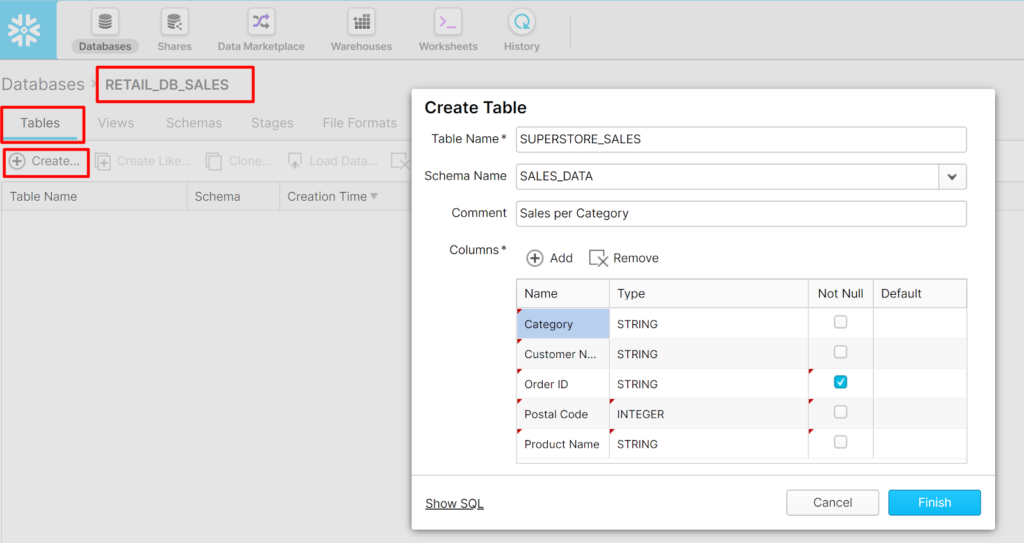

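- Staging Area Setup: Create a stage, the location where CSV files are held before they are loaded into tables. A stage can be internal (managed by Snowflake) or external (pointing at cloud storage such as Amazon S3, Azure Blob Storage, or Google Cloud Storage).

- File Upload: Upload CSV files to the stage. For an internal stage, use the PUT command from a client such as SnowSQL; for an external stage, place the files in the cloud storage location with the provider’s own tools or a third-party integration.

- Data Ingestion: Execute a SQL command to copy data from the staged files into Snowflake tables. Snowflake’s COPY INTO command performs bulk loading and processes multiple staged files in parallel, so several moderately sized files generally load faster than one very large file.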
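The three steps above can be sketched as the SQL statements a client would send. The Python helper below only builds the statement text; it does not connect to Snowflake. The function name, the stage `my_csv_stage`, the table `sales`, and the local path are hypothetical, and the file-format options are assumptions to adjust to the actual files.

```python
def csv_load_statements(stage: str, table: str, local_path: str) -> list[str]:
    """Return SQL text for a stage -> upload -> copy workflow (illustrative only)."""
    return [
        # 1. Named internal stage with a CSV file format attached.
        #    SKIP_HEADER = 1 assumes each file starts with a header row.
        f"CREATE STAGE IF NOT EXISTS {stage} "
        "FILE_FORMAT = (TYPE = 'CSV' FIELD_DELIMITER = ',' SKIP_HEADER = 1)",
        # 2. PUT uploads a local file to the stage. It must be run from a
        #    client such as SnowSQL or a driver, not from a web worksheet.
        f"PUT file://{local_path} @{stage} AUTO_COMPRESS = TRUE",
        # 3. Bulk load every staged file into the target table.
        f"COPY INTO {table} FROM @{stage} ON_ERROR = ABORT_STATEMENT",
    ]


if __name__ == "__main__":
    for statement in csv_load_statements("my_csv_stage", "sales", "/tmp/sales.csv"):
        print(statement + ";")
```

Because the file format is attached to the stage, the COPY INTO statement does not need to repeat it. The names are interpolated directly into the SQL text, so this sketch is only suitable for trusted, hard-coded identifiers.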

Loading Data from JSON Files

JSON (JavaScript Object Notation) has become increasingly popular for semi-structured data due to its flexibility and simplicity. Snowflake supports loading JSON data efficiently, with the following steps:

- Staging Area Configuration: As with CSV loading, set up a stage and upload the JSON files to it.

- JSON Parsing: Snowflake parses JSON natively. Documents can be loaded as-is into a VARIANT column and queried with path notation, or individual fields can be extracted and cast to typed table columns during the load.

- Data Loading: Use Snowflake’s COPY INTO command for batch loads, or Snowpipe, a continuous ingestion service, to load JSON data into target tables. Snowpipe loads files shortly after they arrive in a stage, typically within minutes, which suits near-real-time scenarios where data lands as a steady stream of small files.
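To illustrate the field-to-column mapping described above, here is a minimal Python sketch that builds a COPY INTO statement with a transforming SELECT. It assumes each staged file holds JSON documents readable as `$1`, and it uses Snowflake’s `$1:path::TYPE` notation to extract and cast fields. The function name, table, stage, and field names are hypothetical.

```python
def json_copy_statement(
    table: str, stage: str, columns: dict[str, tuple[str, str]]
) -> str:
    """Build a COPY INTO statement that maps JSON fields to typed columns.

    `columns` maps each target column to (JSON path, Snowflake type).
    Dict insertion order decides the column order in the statement.
    """
    column_list = ", ".join(columns)
    # $1 is the single VARIANT value Snowflake exposes per JSON document.
    select_list = ", ".join(
        f"$1:{path}::{sql_type}" for path, sql_type in columns.values()
    )
    return (
        f"COPY INTO {table} ({column_list}) "
        f"FROM (SELECT {select_list} FROM @{stage}) "
        "FILE_FORMAT = (TYPE = 'JSON')"
    )


if __name__ == "__main__":
    print(
        json_copy_statement(
            "events",
            "my_json_stage",
            {"event_id": ("id", "NUMBER"), "city": ("location.city", "STRING")},
        )
    )
```

Extracting fields at load time gives typed columns that are cheaper to filter on. Loading the whole document into a single VARIANT column is the simpler alternative when the schema is still changing.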

Loading Data from External Databases

Many organizations store data in external databases such as Oracle, SQL Server, or MySQL. Data from these systems can be brought into Snowflake through several methods:

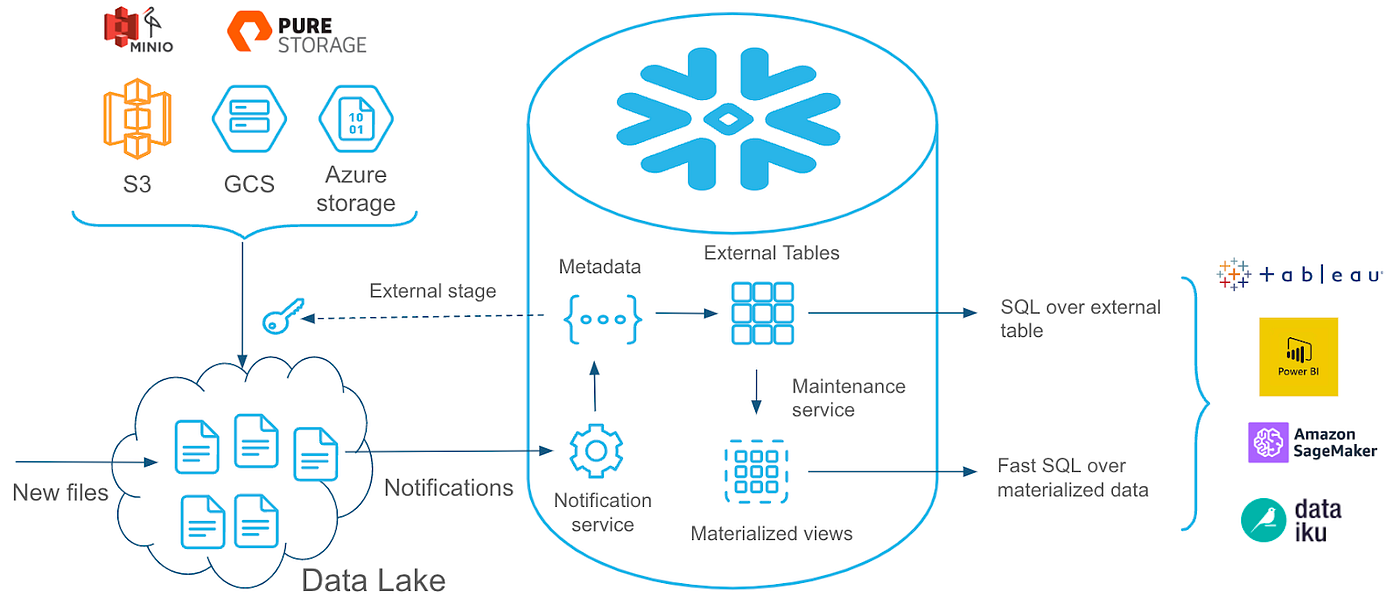

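- External Tables: Snowflake’s external table feature allows users to define tables over files in an external stage (cloud storage such as Amazon S3, Azure Blob Storage, or Google Cloud Storage) rather than over another database. When a source database exports its data to cloud storage, external tables make those files queryable within Snowflake without first copying them into Snowflake tables, though queries are typically slower than against native tables.

- Data Replication: For scenarios requiring periodic data synchronization, change data capture (CDC) tools or connectors can capture changes from external databases and apply them to Snowflake tables. Snowflake’s own replication feature, by contrast, copies data between Snowflake accounts.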

- ETL Integration: Integrate Snowflake with popular Extract, Transform, Load (ETL) tools such as Informatica, Talend, or Matillion to streamline data loading from external databases.
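Whichever tool performs the extraction, the underlying pattern is often the same: read rows from the source database, write them to compressed files, and stage those files for COPY INTO. The following is a minimal sketch of the extraction step only. It uses `sqlite3` as a stand-in for the external database because it ships with Python; with Oracle, SQL Server, or MySQL the connection would come from the corresponding DB-API driver. The function name and file paths are hypothetical.

```python
import csv
import gzip
import sqlite3


def export_table_to_csv_gz(
    conn: sqlite3.Connection, table: str, out_path: str, batch_size: int = 1000
) -> int:
    """Write all rows of `table` to a gzip-compressed CSV; return the row count.

    `table` is interpolated into the query, so pass only trusted names.
    """
    cursor = conn.execute(f"SELECT * FROM {table}")
    header = [column[0] for column in cursor.description]
    rows_written = 0
    with gzip.open(out_path, "wt", newline="", encoding="utf-8") as fh:
        writer = csv.writer(fh)
        writer.writerow(header)
        # Fetch in batches so large tables are not held in memory at once.
        while True:
            batch = cursor.fetchmany(batch_size)
            if not batch:
                break
            writer.writerows(batch)
            rows_written += len(batch)
    return rows_written


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)])
    print(export_table_to_csv_gz(conn, "orders", "orders.csv.gz"))
```

The resulting file can then be uploaded to a stage and loaded with COPY INTO, using a CSV file format that skips the header row.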

Best Practices for Efficient Data Loading

Regardless of the data source, adhering to best practices can optimize the data loading process in Snowflake:

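- Use Bulk Loading: Whenever possible, load data with COPY INTO from staged files rather than with row-by-row INSERT statements.

- Partitioning and Clustering: Snowflake divides table data into micro-partitions automatically, so there is no manual partitioning step. For very large tables, defining a clustering key, or loading data already sorted on commonly filtered columns, can improve partition pruning and query performance.

- Data Compression: Snowflake compresses table data automatically. Compress staged files (for example, with gzip) to reduce upload time and transfer cost; the PUT command compresses uncompressed files by default.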

- Monitor and Tune: Continuously monitor data loading processes and performance metrics, fine-tuning configurations as needed to maintain optimal efficiency.
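One practical way to combine bulk loading with compression is to split a large export into several compressed files before staging it, so that COPY INTO can process them in parallel. Snowflake’s general guidance is to aim for compressed files of roughly 100 to 250 MB. The sketch below splits by row count as a simple proxy for size, which is an assumption to tune against real data. The function name and the `part_NNNN.csv.gz` naming scheme are hypothetical.

```python
import csv
import gzip
from itertools import islice
from pathlib import Path


def split_csv_gz(src: str, out_dir: str, rows_per_file: int) -> list[Path]:
    """Split a CSV that has a header row into gzip-compressed chunks.

    Each chunk repeats the header so every file can be loaded on its own.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths: list[Path] = []
    with open(src, newline="", encoding="utf-8") as fh:
        reader = csv.reader(fh)
        header = next(reader)  # raises StopIteration if the file is empty
        while True:
            chunk = list(islice(reader, rows_per_file))
            if not chunk:
                break
            path = out / f"part_{len(paths):04d}.csv.gz"
            with gzip.open(path, "wt", newline="", encoding="utf-8") as gz:
                writer = csv.writer(gz)
                writer.writerow(header)
                writer.writerows(chunk)
            paths.append(path)
    return paths
```

For example, `split_csv_gz("sales.csv", "chunks", 1_000_000)` would write one file per million data rows into a `chunks` directory. Because each chunk carries its own header, the load should use a file format that skips one header row per file.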

Conclusion

Efficient data loading is fundamental to maximizing the value of Snowflake as a data warehousing platform. By applying the techniques outlined in this article, organizations can ingest data from various sources reliably and put it to work in data-driven decision-making. Whether the source is CSV files, JSON files, or external databases, Snowflake provides the stages, commands, and services needed to handle diverse data ingestion requirements.